Technology

The challenge with AI acceleration is not limited to hardware; we believe software is the bigger challenge. The goal is to run any neural network at high utilization (>80%) with low memory usage. Feeding 3-dimensional neural network data through a traditional instruction set makes it intractable for the compiler to reach high utilization, and thereby low power and small size. The CortiCore architecture addresses this with a unique instruction set that dramatically reduces compiler complexity. This approach lets us build a compiler that achieves >80% utilization with 16x less memory (compared to currently available solutions) on all neural networks, as demonstrated on our FPGA platforms.
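To make the utilization figure concrete, here is a minimal sketch that assumes utilization means the fraction of available multiply-accumulate (MAC) slots doing useful work. That definition, the function names, and the layer shape are illustrative assumptions, not part of the CortiCore toolchain.

```python
def conv_macs(out_h: int, out_w: int, in_ch: int, out_ch: int, kernel: int) -> int:
    """MAC count of a standard 2-D convolution layer (stride and padding already folded into out_h/out_w)."""
    return out_h * out_w * in_ch * out_ch * kernel * kernel


def utilization(useful_macs: int, num_alus: int, cycles: int) -> float:
    """Assumed definition: useful MACs divided by the MAC slots available (one MAC per ALU per cycle)."""
    if num_alus <= 0 or cycles <= 0:
        raise ValueError("num_alus and cycles must be positive")
    return useful_macs / (num_alus * cycles)


# Hypothetical layer and hardware numbers, chosen only for illustration.
macs = conv_macs(out_h=56, out_w=56, in_ch=64, out_ch=64, kernel=3)
print(f"utilization: {utilization(macs, num_alus=1024, cycles=140_000):.2%}")
```

Under this definition, every cycle in which an ALU waits for data lowers the ratio, which is why data feeding, rather than raw ALU count, tends to decide the result.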

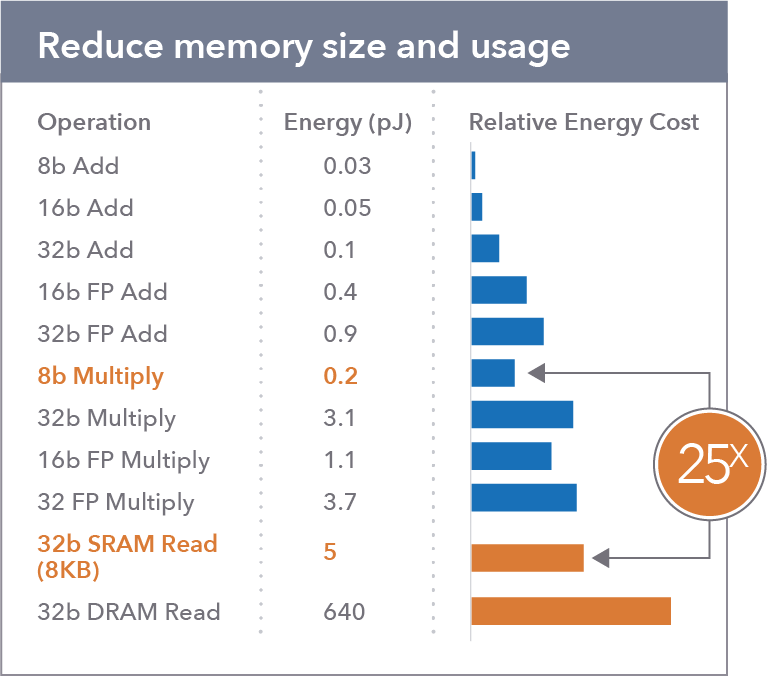

Computing’s Energy Problem and What We Can Do About It

— Mark Horowitz, PhD

As seen in this table, Mark Horowitz, PhD shows that a 32-bit SRAM read takes 25x more energy than an 8-bit multiply. The CortiCore architecture reads each input from non-IP system memory only once and never writes to non-IP memory, in spite of its small (256 KB) internal memory.
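The effect of that 25x ratio can be sketched with simple arithmetic. The per-operation energies below (0.2 pJ for an 8-bit multiply, 5 pJ for a 32-bit SRAM read) are assumed values consistent with the 25x ratio quoted above; the operation counts and function name are hypothetical, and additions and DRAM accesses are ignored.

```python
# Assumed per-operation energies in picojoules, consistent with the 25x ratio above.
MULT_8BIT_PJ = 0.2
SRAM_READ_32BIT_PJ = 5.0


def total_energy_pj(num_multiplies: int, num_reads: int) -> float:
    """Energy of the multiplies plus the memory reads, ignoring every other cost."""
    return num_multiplies * MULT_8BIT_PJ + num_reads * SRAM_READ_32BIT_PJ


# Hypothetical workload: 1000 multiplies over 100 distinct input words.
read_per_multiply = total_energy_pj(num_multiplies=1000, num_reads=1000)
read_once_per_input = total_energy_pj(num_multiplies=1000, num_reads=100)

print(f"read before every multiply: {read_per_multiply:.0f} pJ")
print(f"each input read once:       {read_once_per_input:.0f} pJ")
```

In this toy case, memory reads dominate the total when data is re-fetched for every multiply, so cutting reads matters far more than cutting arithmetic.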

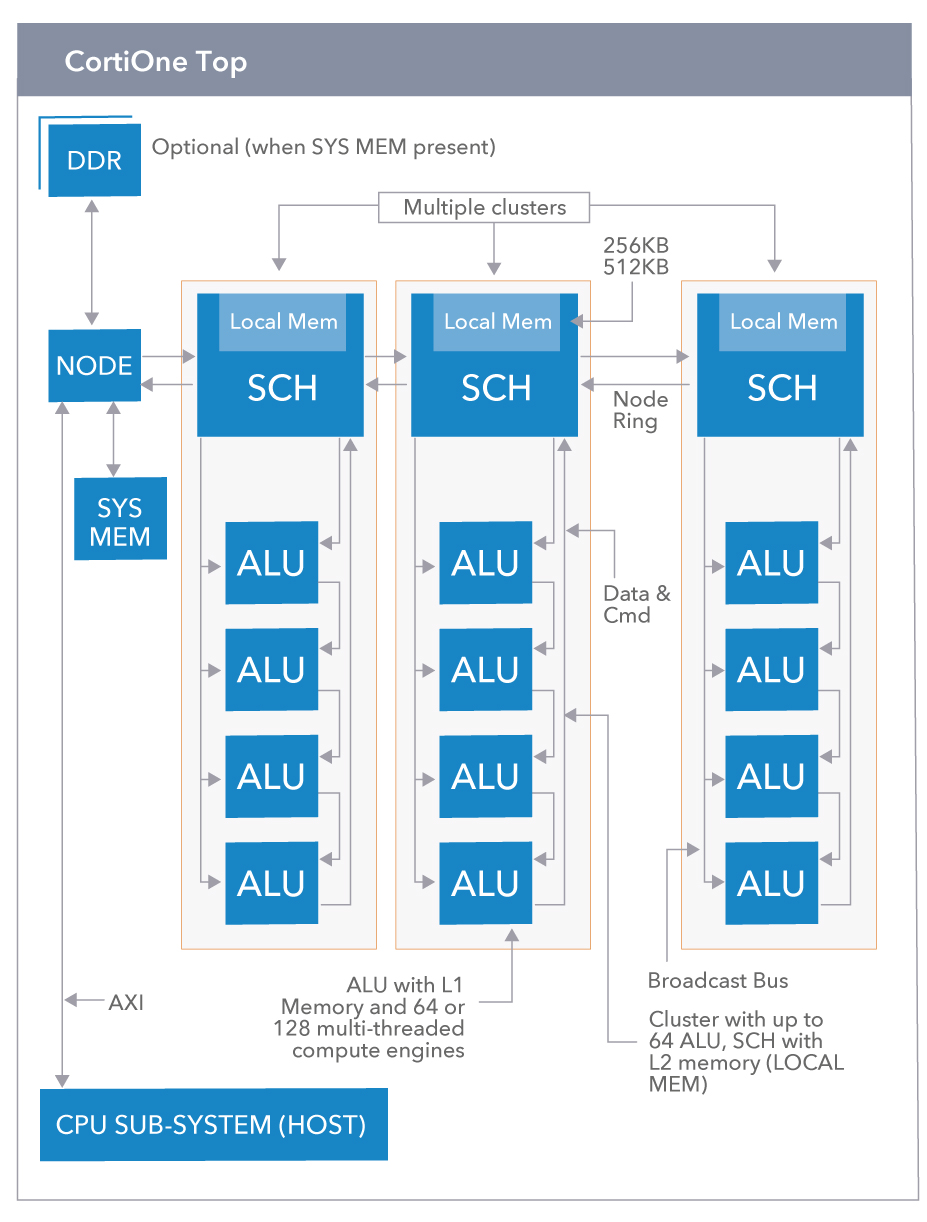

- Scalable RTL via parameters for performance and power

- Number of ALUs

- Number of clusters

- Activation memory size per cluster (local memory; 256 KB or 512 KB typical)

- DDR or no DDR (external memory)

- Internal system memory

- External shared memory (optional)
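The parameters listed above could be captured in a small configuration record like the one below. This is only a sketch: the class, field names, defaults, and validation rules are hypothetical and do not reflect the actual RTL parameter names, and the source does not say whether the ALU count is per cluster or total.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CoreConfig:
    """Hypothetical build-time configuration mirroring the parameter list above."""
    num_alus: int                              # assumption: the source does not say per cluster or total
    num_clusters: int
    activation_mem_kb_per_cluster: int = 256   # local memory; 256 or 512 KB typical
    use_ddr: bool = False                      # DDR or no DDR (external memory)
    use_external_shared_mem: bool = False      # optional external shared memory

    def __post_init__(self) -> None:
        # Basic sanity checks; real constraints are not given in the source.
        if self.num_alus <= 0 or self.num_clusters <= 0:
            raise ValueError("num_alus and num_clusters must be positive")
        if self.activation_mem_kb_per_cluster <= 0:
            raise ValueError("activation memory size must be positive")

    def total_activation_mem_kb(self) -> int:
        """Activation memory summed over all clusters."""
        return self.num_clusters * self.activation_mem_kb_per_cluster


# Illustrative values only.
cfg = CoreConfig(num_alus=64, num_clusters=4)
print(cfg.total_activation_mem_kb(), "KB of activation memory in total")
```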