Solution

Processors for edge computing suffer from:

- Limited processing speed

- Excessive power consumption

- Poor silicon utilization

- High clock frequencies

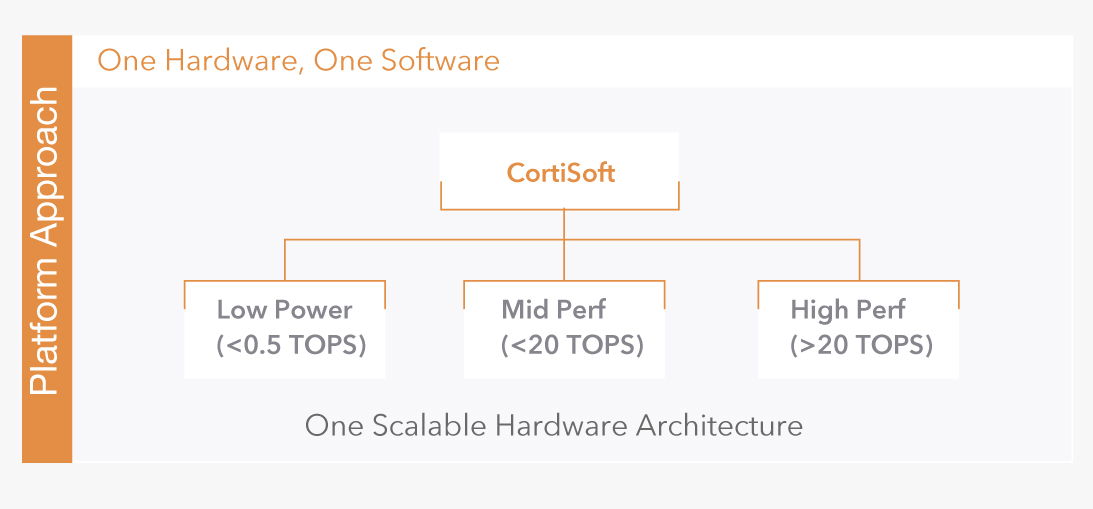

Roviero has developed a native graph-computing processor for edge inference. The CortiCore architecture addresses these problems with a unique instruction set that dramatically reduces compiler complexity.

You no longer have to choose between low power and performance!

Watch five cameras running simultaneously on our demo system.

Key Features

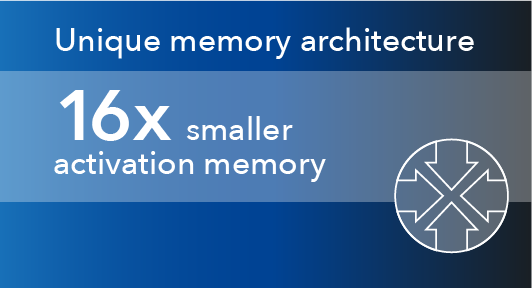

Internal Memory

- Low internal memory requirement (minimum 256 KB)

- Flexible tradeoff between performance and memory

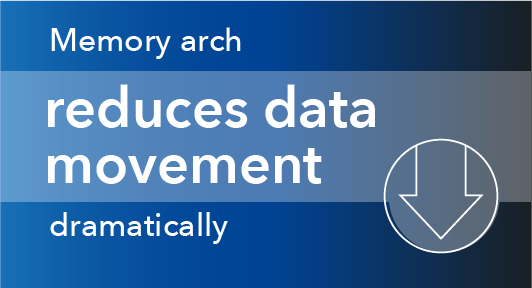

External Memory

- Sleeps more than 99% of the time

- Low power: accessed only once per input frame
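A simple duty-cycle model shows why a memory that sleeps most of the time keeps power low. As a sketch, assume the external memory draws $P_\text{active}$ while it is being accessed and $P_\text{sleep}$ otherwise, and let $d$ be the fraction of time it is active (these symbols are illustrative, not Roviero specifications):

$$P_\text{avg} = d\,P_\text{active} + (1-d)\,P_\text{sleep}$$

Sleeping more than 99% of the time means $d < 0.01$, so

$$P_\text{avg} < 0.01\,P_\text{active} + P_\text{sleep}$$

Under this model, the average external-memory power is bounded by the sleep power plus at most 1% of the active power.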

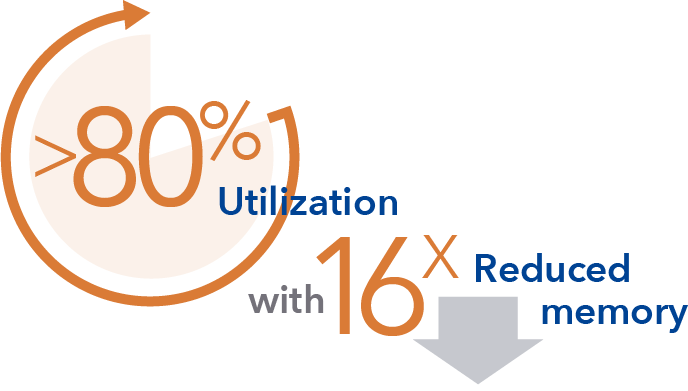

High Utilization

- More than 80% utilization across all types of model structure

- Efficiently handles both weight-stationary and data-stationary dataflows

Power Consumption

- Achieves microwatt-level power where incumbents struggle at milliwatt levels

Speed

- Scalable from 0.1 TOPS to 100 TOPS

- Runs at a low clock frequency: 10-30x better

- Compiler designed to bring up networks efficiently

- Supports large input frames without downscaling
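The speed figures can be related through the standard peak-throughput estimate, which counts each multiply-accumulate (MAC) as two operations. In this sketch, $N_\text{MAC}$ (number of MAC units, assumed here to correspond to the ALUs), $f_\text{clk}$ (clock frequency) and $U$ (utilization) are illustrative symbols, and the numbers are hypothetical rather than CortiCore specifications:

$$\text{Peak ops/s} = 2\,N_\text{MAC}\,f_\text{clk}, \qquad \text{Effective ops/s} = U \cdot 2\,N_\text{MAC}\,f_\text{clk}$$

For example, with $N_\text{MAC} = 1024$, $f_\text{clk} = 500\ \text{MHz}$ and $U = 0.8$:

$$2 \times 1024 \times 5\times10^{8} = 1.024\times10^{12}\ \text{ops/s} \approx 1.02\ \text{TOPS peak}, \qquad 0.8 \times 1.024 \approx 0.82\ \text{TOPS effective}$$

Because throughput scales with the product $N_\text{MAC}\,f_\text{clk}$, doubling the number of parallel units allows the clock to be halved at the same throughput, which is how a highly parallel design can deliver high performance at a low clock frequency.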

Configuration

- Can be reconfigured or extended to support current and future application models

Scalability

- Scalable RTL via parameters for performance and power:

  - Number of ALUs

  - Number of clusters

  - Activation memory size per cluster

  - DDR or no DDR (external memory)

  - Internal system memory

  - External shared memory

- Hardware configuration as input to the compiler
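To illustrate how the RTL parameters above could double as a hardware description for the compiler, here is a minimal Python sketch. The class name, field names, JSON output and validation rules are all hypothetical; the document does not publish CortiCore's actual parameter names or compiler input format. The 256 KB check assumes the "Internal Memory" minimum applies to the internal system memory parameter.

```python
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class HardwareConfig:
    """Hypothetical hardware description handed to the compiler."""
    num_alus: int                       # number of ALUs
    num_clusters: int                   # number of clusters
    activation_mem_kb_per_cluster: int  # activation memory size per cluster
    has_ddr: bool                       # DDR or no DDR (external memory)
    internal_system_mem_kb: int         # internal system memory
    external_shared_mem_kb: int         # external shared memory (0 if none)

    def validate(self) -> None:
        """Reject obviously invalid configurations."""
        if self.num_alus <= 0 or self.num_clusters <= 0:
            raise ValueError("ALU and cluster counts must be positive")
        # Assumption: the 256 KB minimum applies to internal system memory.
        if self.internal_system_mem_kb < 256:
            raise ValueError("internal system memory must be at least 256 KB")

    def total_activation_mem_kb(self) -> int:
        """Activation memory summed over all clusters."""
        return self.num_clusters * self.activation_mem_kb_per_cluster

    def to_compiler_json(self) -> str:
        """Serialize for a hypothetical compiler input file."""
        self.validate()
        return json.dumps(asdict(self), sort_keys=True)


# Example: a small no-DDR configuration (all values illustrative).
small = HardwareConfig(
    num_alus=256,
    num_clusters=4,
    activation_mem_kb_per_cluster=64,
    has_ddr=False,
    internal_system_mem_kb=256,
    external_shared_mem_kb=0,
)
print(small.total_activation_mem_kb())  # 256
print(small.to_compiler_json())
```

Keeping the configuration in a single frozen record means the same values that parameterize the hardware can be checked once and then passed unchanged to the toolchain.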

Additional Key Features

- Any framework, any neural network, any backbone

- AI-optimized instruction set that makes the compiler possible

- AI data-movement and compute-oriented instructions

- More than 80% compute utilization

- Highly parallel design: high performance at a low operating frequency

- Implements sparse neural networks efficiently, reducing model size and compute requirements by more than 3x

- All-digital logic: can be implemented in any process node

- Requires very little host code to run the AI processing job